Yushi Huang, Ph.D. candidate @ HKUST

I am a first-year Ph.D. student at the Hong Kong University of Science and Technology (HKUST), supervised by Prof. Jun Zhang. I received my B.E. degree from Beihang University. I am also a research intern at SenseTime Research, working closely with Dr. Ruihao Gong. Previously, I interned at Microsoft Research Asia and SenseTime Research. My research focuses on efficient vision and language generative models.

News

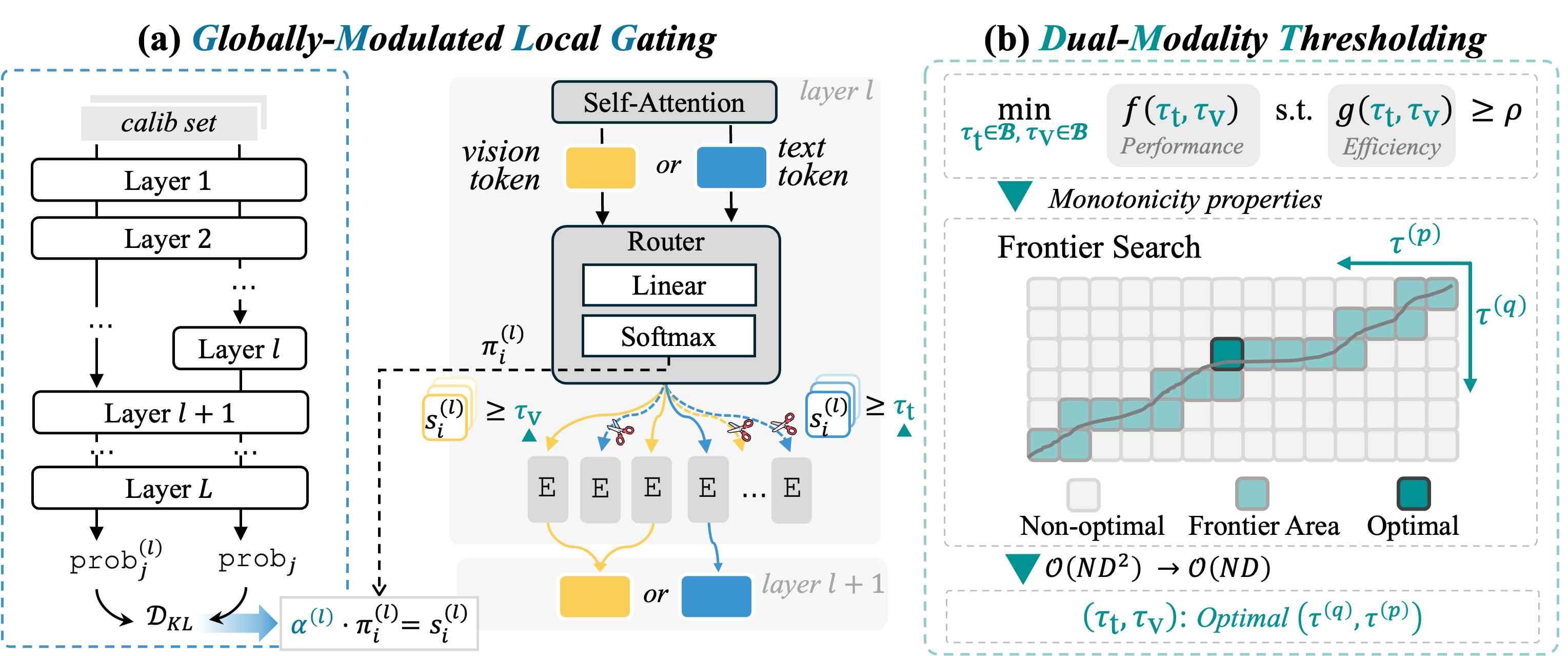

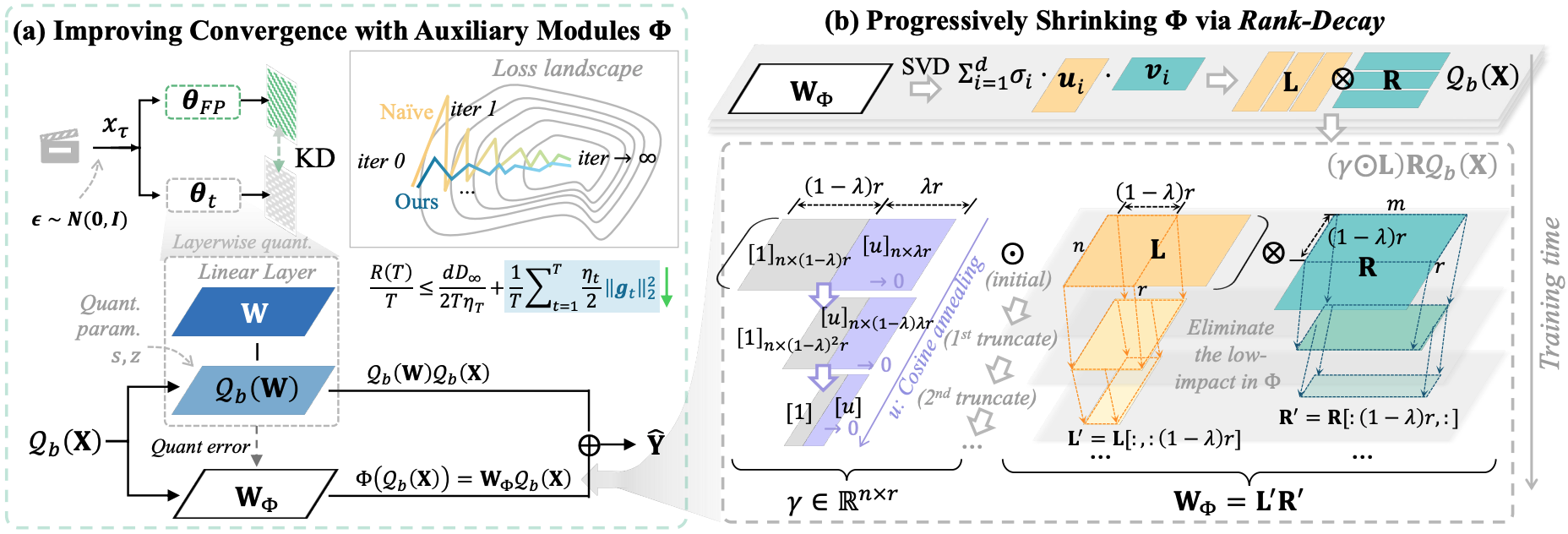

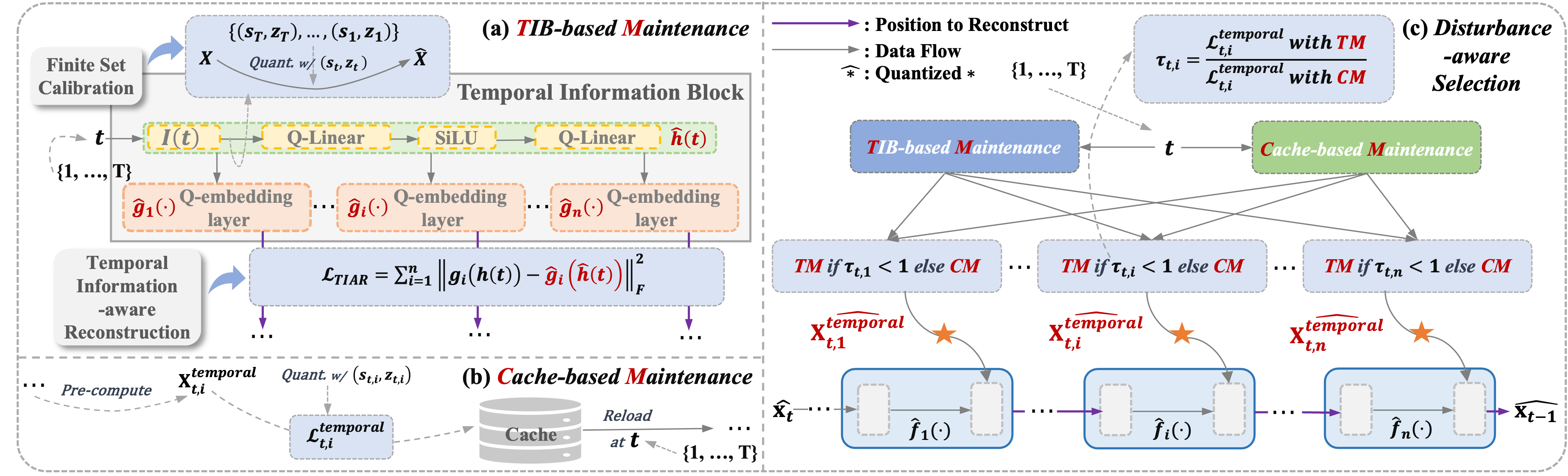

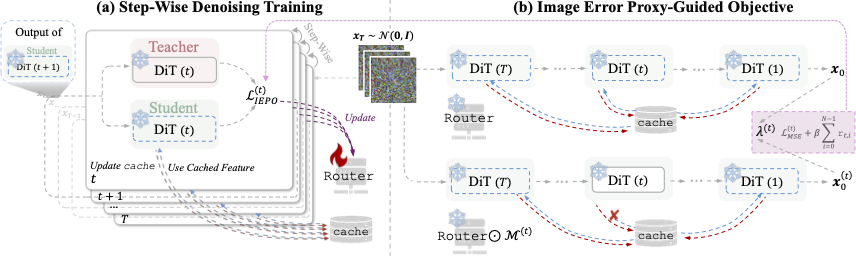

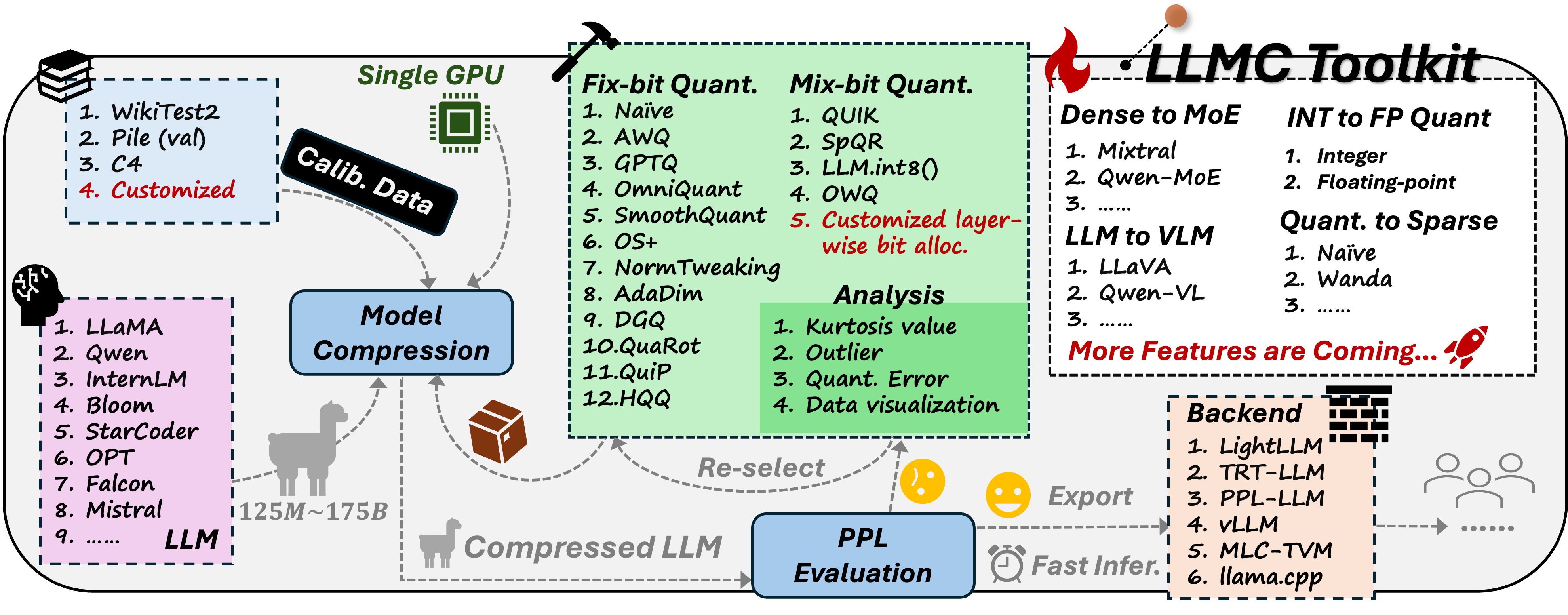

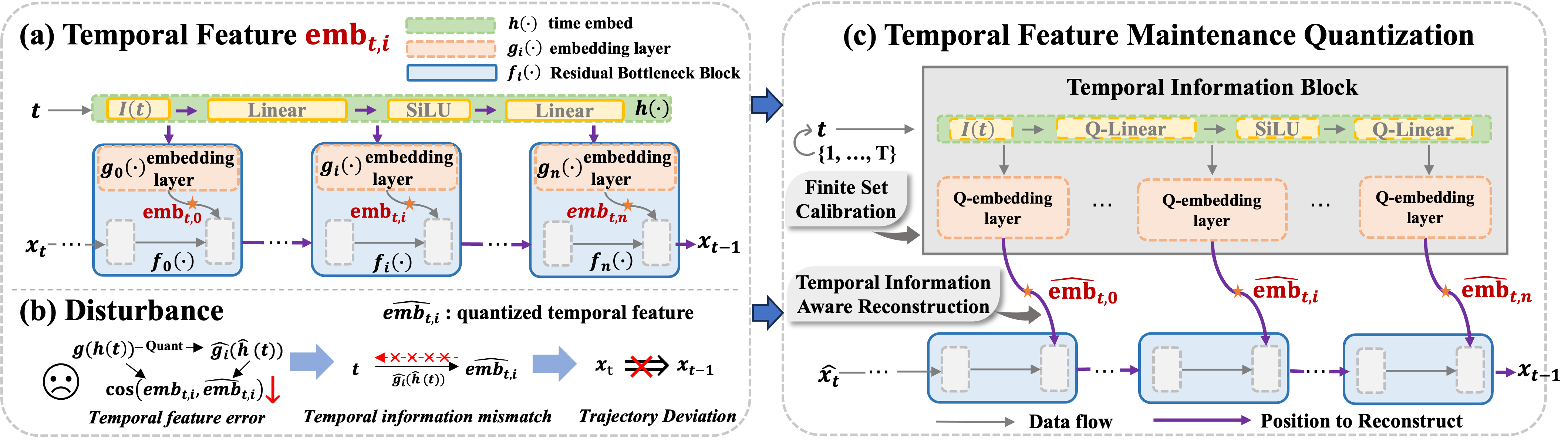

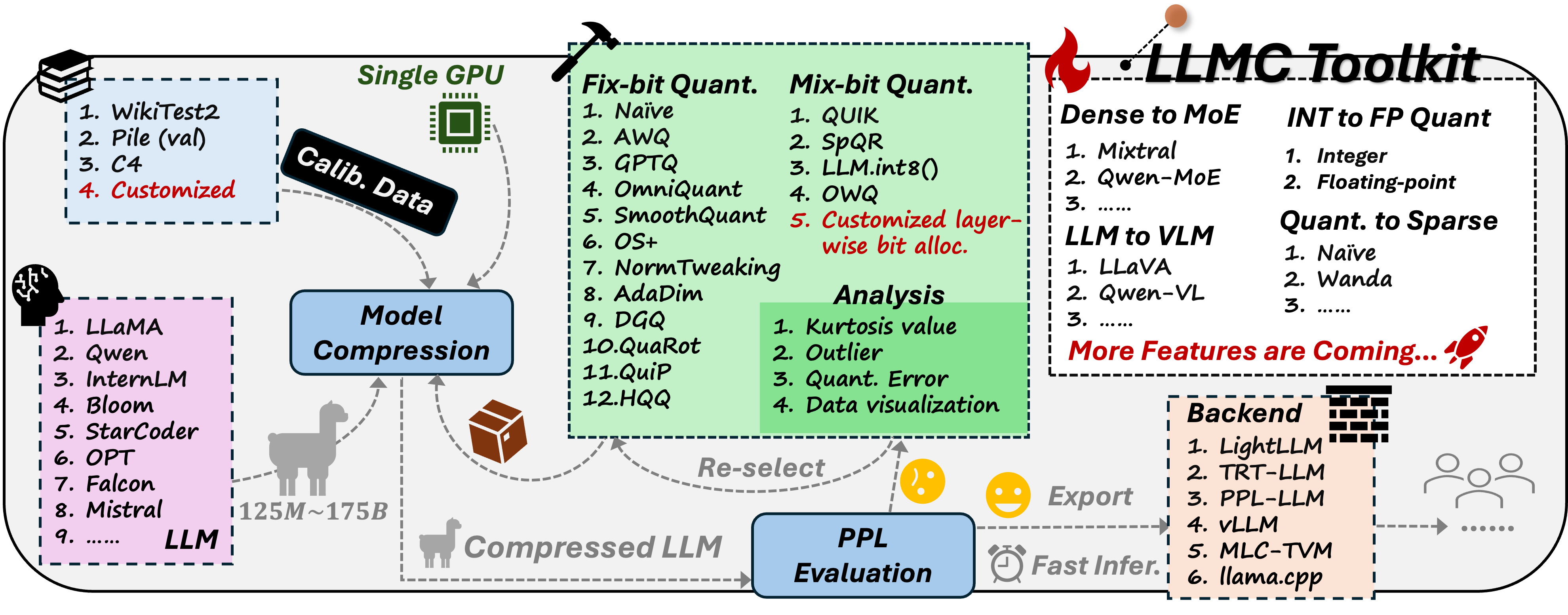

Selected Papers

Includes preprints; * indicates equal contribution, 📧 indicates corresponding author.

Preprint
MoDES: Accelerating Mixture-of-Experts Multimodal Large Language Models via Dynamic Expert Skipping
Yushi Huang, Zining Wang, Zhihang Yuan📧, Yifu Ding, Ruihao Gong, Jinyang Guo, Xianglong Liu, Jun Zhang📧

ICLR 2026
QVGen: Pushing the Limit of Quantized Video Generative Models
Yushi Huang, Ruihao Gong📧, Jing Liu, Yifu Ding, Chengtao Lv, Haotong Qin, Jun Zhang📧

TPAMI 2025
Temporal Feature Matters: A Framework for Diffusion Model Quantization
Yushi Huang, Ruihao Gong, Xianglong Liu📧, Jing Liu, Yuhang Li, Jiwen Lu, Dacheng Tao

ICML 2025
Yushi Huang*, Zining Wang*, Ruihao Gong📧, Jing Liu, Xinjie Zhang, Jinyang Guo, Xianglong Liu, Jun Zhang📧

EMNLP 2024 Industry Track
LLMC: Benchmarking Large Language Model Quantization with a Versatile Compression Toolkit
Ruihao Gong*, Yang Yong*, Shiqiao Gu*, Yushi Huang*, Chengtao Lv, Yunchen Zhang, Dacheng Tao, Xianglong Liu📧

CVPR 2024 Highlight
TFMQ-DM: Temporal Feature Maintenance Quantization for Diffusion Models
Yushi Huang*, Ruihao Gong*, Jing Liu, Tianlong Chen, Xianglong Liu📧

Projects

Toolkit
LightCompress is an off-the-shelf compression suite for AIGC models (LLMs, VLMs, diffusion models, etc.) that packages SOTA quantization, sparsification, and deployment best practices to shrink models while preserving accuracy. 600+ GitHub stars.

Services

Education

Internships
