
Yushi Huang, Ph.D. candidate @ HKUST


I am a Ph.D. student at the Hong Kong University of Science and Technology (HKUST), supervised by Prof. Jun Zhang. I received my B.E. degree from Beihang University. My current research interest is multimodal reinforcement learning (e.g., for VLMs and diffusion models). I also work on efficient training and inference for vision and language generative models.

News

Selected Papers

Includes preprints; * indicates equal contribution, 📧 indicates corresponding author.

CVPR 2026 · MoDES: Accelerating Mixture-of-Experts Multimodal Large Language Models via Dynamic Expert Skipping
Yushi Huang, Zining Wang, Zhihang Yuan📧, Yifu Ding, Ruihao Gong, Jinyang Guo, Xianglong Liu, Jun Zhang📧

CVPR 2026 · LinVideo: A Post-Training Framework towards $\mathcal{O}(n)$ Attention in Efficient Video Generation
Yushi Huang, Xingtong Ge, Ruihao Gong📧, Chengtao Lv, Jun Zhang📧

ICLR 2026 · QVGen: Pushing the Limit of Quantized Video Generative Models
Yushi Huang, Ruihao Gong📧, Jing Liu, Yifu Ding, Chengtao Lv, Haotong Qin, Jun Zhang📧

ICML 2025
Yushi Huang*, Zining Wang*, Ruihao Gong📧, Jing Liu, Xinjie Zhang, Jinyang Guo, Xianglong Liu, Jun Zhang📧

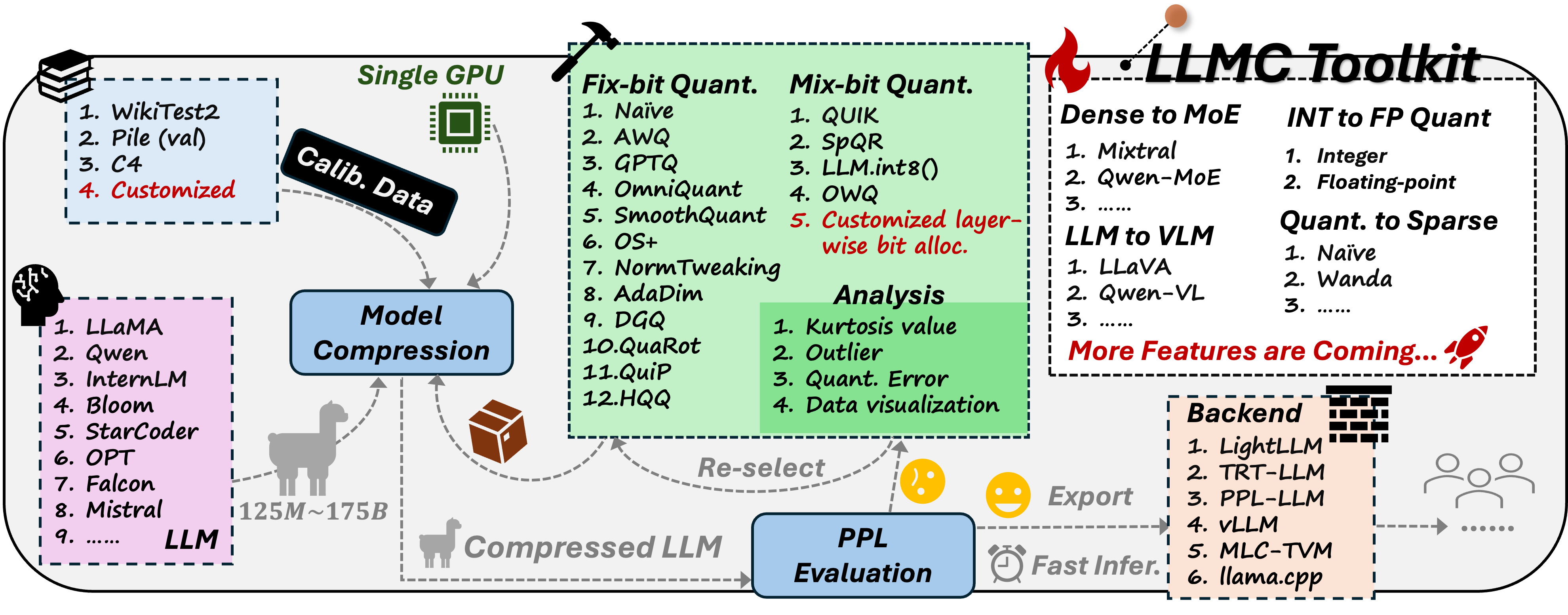

Projects

Toolkit · LightCompress is an off-the-shelf compression suite for AIGC models (LLMs, VLMs, diffusion models, etc.). It packages state-of-the-art quantization, sparsification, and deployment best practices to shrink models while preserving accuracy. 600+ GitHub stars.

Services

Education

Internships
